The objective of this lab was to use a mobile device and a desktop computer to work through an online Esri tutorial on Survey 123. Survey 123 is an app for gathering field data that can be used for many different applications. To start the exercise, the HOA (homeowners association) emergency preparedness survey was created. From there, the tutorial covered how to make a survey and how to fill out, analyze, and share the survey data. Screenshots from the exercise show how the process was completed, and maps show what the survey data portrayed. Survey 123 is an effective tool in the field because it lets users upload their surveys almost instantly after collection. It is a convenient program that allows users to quickly and effectively set up professional surveys.

Methods


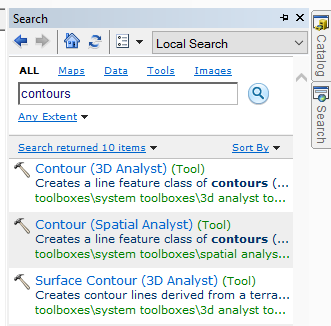

Figure 1

All of the questions in this survey dealt with HOA emergency preparedness, which helps ensure that the homeowners association can plan for potential disasters such as floods and earthquakes. In total, 29 questions were created for the survey. Each of the question-type tabs shown in Figure 1 has a particular use. The Number type is used for numeric values, such as the number of fire extinguishers or the number of days since the fire alarms were last checked. Multiline Text, by contrast, lets the survey taker type a free-form response, which can have a minimum or maximum character limit. The most frequently used format was Single Choice, which in this survey offered the survey taker a yes-or-no answer; in some cases, selecting yes revealed a follow-up drop-down question.

Figure 2

Results

Figure 3

Figure 4

Figure 3 shows a screenshot taken on a smartphone, while Figure 4 shows a screenshot from a desktop computer. The survey was taken on both types of device in order to see the differences and to get a chance to work with both options. The survey was completed a total of 6 times, each time with different answers and a different location, with one exception: two surveys were completed in Eau Claire.

Once the survey had been filled out 6 times, there was data to view. Each tab in the top right of the website offers a different way to use the collected data. Under the Analyze tab, the user can see a map for many of the questions asked, along with proportional symbol maps, bar graphs, pie charts, and a graph showing when the surveys were taken. Under the Overview tab, the user can see the total number of participants, meaning the number of different people who have taken the survey. Under the Collaborate tab, shown in Figure 5, the Survey 123 user can change who is able to see the survey. Because this survey was only a tutorial, it was shared only with members of UWEC Geography and Anthropology.

Figure 5

Figure 6

Figure 6 shows that the survey was taken 6 times to produce some data to examine. The more times the survey is taken, the more representative the data will be, and real responses from a number of different people would make the results far more informative.

Figure 7

Figure 7 shows a copy of the survey being made. This is done in case the survey's author finds a problem after publishing it. Once a survey is published, many changes can no longer be made; however, changes can be made to the copy, which can then be published correctly as a new survey.

Figure 8 is a screenshot showing the survey locations, that is, where each house or apartment was located. This can all be done from within the Survey 123 site. The result can easily be turned into a map in Adobe Illustrator or by importing the data into ArcMap. Even an inexperienced user could use this program by following the directions laid out in the tutorial.

Figure 8

Figure 9

Conclusion

Survey 123 is an effective way to create surveys. The surveys it produces look professional, and the tool is likely to have many applications in the future. The surveys are easy to build and quick to complete, so they take little of the survey taker's time. The program then displays the collected data clearly, making it easy to see how the responses are distributed and what they mean. The application has many uses in the geography discipline; for example, surveyors could use it to ask whether what they are doing is effective or what they could be doing differently.

Sources

https://learn.arcgis.com/en/projects/get-started-with-survey123/lessons/share-your-survey-data.htm

https://learn.arcgis.com/en/gallery/

https://learn.arcgis.com/en/projects/get-started-with-survey123/